- Oct 11, 2025


Developed by Anthropic, Model Context Protocol (MCP) is an open protocol that lets AI assistants built on large language models connect to external tools, databases, and APIs through a standardized interface. MCP addresses a fundamental challenge in AI development: enabling AI models to access real-time, relevant external information and perform actions beyond their static training data.

What is Model Context Protocol (MCP)?

MCP enables AI-powered applications such as chatbots, IDE assistants, or custom AI agents to request data, execute functions, and interact with external systems in a secure, structured, and standardized way.

Anthropic describes MCP as akin to a "USB-C port for AI applications," highlighting its goal to be a common interface that any AI model can use to connect to a wide variety of external tools and data sources. By doing so, MCP allows AI models to understand contextual information and take real actions in external applications, such as updating records in a CRM, retrieving files from cloud storage, or triggering workflows in business systems.

Why Was MCP Created?

Before MCP, AI developers faced an "N×M" integration problem: connecting N AI models to M external systems meant building a custom connector for every combination, which was inefficient and fragmented. Existing solutions like OpenAI’s function-calling API or ChatGPT plug-ins were vendor-specific and lacked a universal standard.

MCP was introduced to solve this by providing:

A standardized, open protocol that any AI system can implement.

A secure, flexible framework to connect AI models with diverse external data and tools.

A way to break down AI silos and enable composable AI workflows across platforms and organizations.
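To make the "N×M" problem concrete, the short sketch below counts the integrations needed with and without a shared protocol. The function names and the counts (4 AI applications, 6 external systems) are illustrative assumptions, not figures from any survey.

```python
def point_to_point_connectors(n_apps: int, m_systems: int) -> int:
    """Without a shared protocol, every (app, system) pair needs its own connector."""
    return n_apps * m_systems


def shared_protocol_implementations(n_apps: int, m_systems: int) -> int:
    """With a shared protocol, each app implements a client once
    and each system implements a server once."""
    return n_apps + m_systems


# Illustrative numbers only.
n_apps, m_systems = 4, 6
print(point_to_point_connectors(n_apps, m_systems))        # 24
print(shared_protocol_implementations(n_apps, m_systems))  # 10
```

The gap widens as either side grows, which is the practical argument for a common interface.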

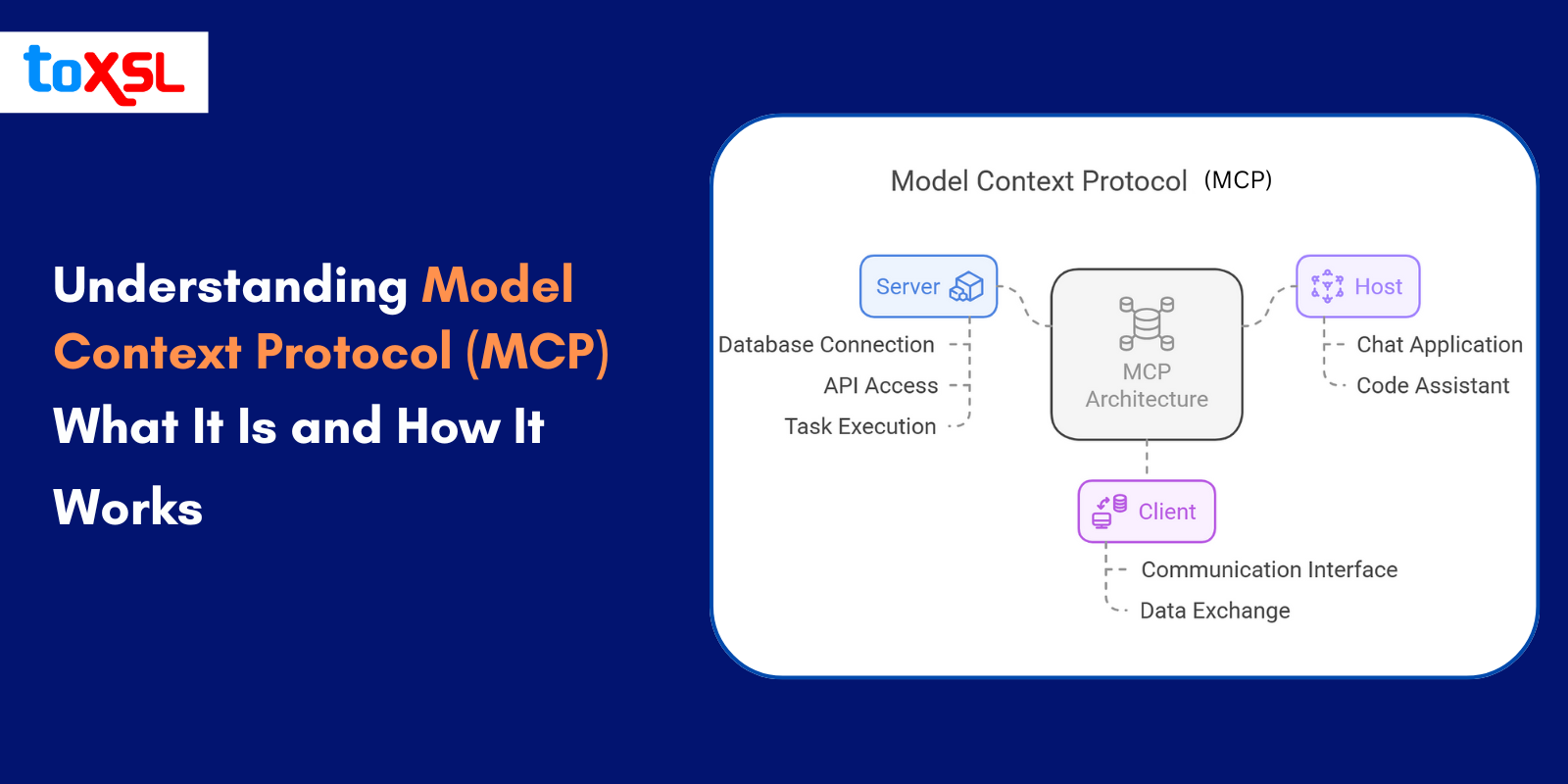

Model Context Protocol: Architecture

MCP follows a simple client-server architecture.

MCP Host: The host is the AI application that receives user requests and uses MCP to access context. Examples include IDEs such as Cursor and desktop assistants such as Claude Desktop. The host holds the orchestration logic and connects each client to a server.

MCP Client: Communication between the host and a server goes through a client. The client lives inside the host and converts requests into the structured format that the protocol defines. A single host can run many clients, but each client has a 1:1 relationship with an MCP server.

MCP Server: An MCP server is a service that exposes external tools and data to AI models so they can act on what users ask for. For example, MCP servers can link up with popular platforms like Slack, GitHub, Git, Docker, or even web search engines. These servers are usually open-source projects you can find on GitHub, written in languages like C#, Java, TypeScript, Python, and others. They provide the AI with special tools to access and work with these platforms.

Most of these GitHub projects include step-by-step tutorials to help developers set up and use the MCP servers easily. Further, servers built with the MCP SDK can serve AI models running on platforms like IBM or OpenAI. This creates a reusable service that clients can use as a common “chat tool” to let AI interact smoothly with different apps and services. According to Anthropic documentation, Model Context Protocol servers expose data through:

Resources: These let the AI get information from databases or other sources, whether inside your company or outside. They provide data but don’t perform any actions or calculations.

Tools: These are more interactive. They allow the AI to do things like run calculations or fetch information by making API calls. In other words, tools can perform tasks that have real effects.

Prompts: These are reusable templates or workflows that a server offers so the AI can handle common tasks in a consistent way.
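The difference between the three primitives is easier to see side by side. The sketch below is a hypothetical in-memory stand-in, not the official MCP SDK: the `ToyServer` class, the `tickets://` URI scheme, and the ticket data are all invented for illustration. A resource only reads data, a tool changes state, and a prompt is a parameterized template.

```python
from typing import Callable, Dict


class ToyServer:
    """Hypothetical in-memory stand-in for an MCP server (not the official SDK)."""

    def __init__(self) -> None:
        self.resources: Dict[str, Callable[[], str]] = {}
        self.tools: Dict[str, Callable[..., object]] = {}
        self.prompts: Dict[str, str] = {}

    def read_resource(self, uri: str) -> str:
        # Resources return data and have no side effects.
        return self.resources[uri]()

    def call_tool(self, name: str, **arguments) -> object:
        # Tools run a function that may change external state.
        return self.tools[name](**arguments)

    def get_prompt(self, name: str, **arguments) -> str:
        # Prompts are reusable templates filled in with arguments.
        return self.prompts[name].format(**arguments)


server = ToyServer()
tickets = {"T-1": "open"}  # made-up data standing in for an external system


def close_ticket(ticket_id: str) -> str:
    tickets[ticket_id] = "closed"
    return f"{ticket_id} closed"


server.resources["tickets://T-1"] = lambda: tickets["T-1"]
server.tools["close_ticket"] = close_ticket
server.prompts["summarize_ticket"] = "Summarize the status of ticket {ticket_id}."

print(server.read_resource("tickets://T-1"))              # read only
print(server.call_tool("close_ticket", ticket_id="T-1"))  # changes state
print(server.read_resource("tickets://T-1"))              # reflects the change
print(server.get_prompt("summarize_ticket", ticket_id="T-1"))
```

A real server would also publish descriptions and input schemas for each entry so that clients can discover them.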

How does MCP work?

MCP is built around a client-server architecture that connects AI apps to external data sources and tools in a standardized way. Here’s how the process typically flows:

Initialization: When the host application starts, it creates one or more MCP clients. Each client connects to its assigned MCP server and they perform a handshake, exchanging information about supported protocol versions and capabilities.

Discovery: The client asks the server what it can do and what tools, resources, and prompts are available. The server replies with a list and descriptions, so the client knows how to interact with it.

Context Provision: The host application can now make the server’s resources and prompts available to the AI model. For example, it might provide data from a database or a reusable workflow template to help the AI understand the context better.

Invocation: When the AI model needs to act, it signals the host. The host tells the client to send a request to the appropriate MCP server to execute that action.

Execution: The server receives the request, performs the required operation, and prepares the results.

Response: The server sends the results back to the client.

Completion: The client passes the results to the host, which incorporates this fresh information into the AI model’s context. The AI can then generate an informed response to the user, enriched with up-to-date external data.
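The steps above can be sketched as a small in-process simulation. MCP messages follow JSON-RPC 2.0, and the method names `initialize`, `tools/list`, and `tools/call` come from the MCP specification; everything else here is an assumption made for illustration, including the `ToyServer` and `ToyClient` classes, the `add` tool, and the example protocol version string. The sketch is simplified: a real client also sends an `initialized` notification after the handshake, and real tool listings carry an input schema.

```python
import json
from typing import Any, Dict, List, Optional

PROTOCOL_VERSION = "2025-06-18"  # example value; check the spec revision you target


class ToyServer:
    """Hypothetical in-process server that answers JSON-RPC-shaped requests."""

    def __init__(self) -> None:
        self._tools = {
            "add": {
                "description": "Add two integers.",
                "run": lambda args: args["a"] + args["b"],
            }
        }

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        method, params = request["method"], request.get("params", {})
        if method == "initialize":  # handshake: version and capabilities
            result = {"protocolVersion": PROTOCOL_VERSION,
                      "capabilities": {"tools": {}}}
        elif method == "tools/list":  # discovery
            result = {"tools": [{"name": n, "description": t["description"]}
                                for n, t in self._tools.items()]}
        elif method == "tools/call":  # execution
            value = self._tools[params["name"]]["run"](params["arguments"])
            result = {"content": [{"type": "text", "text": str(value)}]}
        else:
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


class ToyClient:
    """One client per server, mirroring the 1:1 client-server relationship."""

    def __init__(self, server: ToyServer) -> None:
        self._server = server
        self._next_id = 0

    def request(self, method: str,
                params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        self._next_id += 1
        message: Dict[str, Any] = {"jsonrpc": "2.0", "id": self._next_id,
                                   "method": method}
        if params is not None:
            message["params"] = params
        # Round-trip through JSON to mimic crossing a transport boundary.
        response = self._server.handle(json.loads(json.dumps(message)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]


# Host-side flow: initialization, discovery, invocation, completion.
client = ToyClient(ToyServer())
init = client.request("initialize", {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {},
    "clientInfo": {"name": "toy-host", "version": "0.1"},
})
tools = client.request("tools/list")["tools"]
result = client.request("tools/call",
                        {"name": "add", "arguments": {"a": 2, "b": 3}})
# The host would append this text to the model's context.
context_for_model: List[str] = [item["text"] for item in result["content"]]
print(init["protocolVersion"], [t["name"] for t in tools], context_for_model)
```

Keeping the client as a thin request/response wrapper leaves the decision about when to call a tool with the host, which matches the division of roles described in the architecture section.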

MCP supports different ways to transport these messages, including local communication via standard input/output and remote communication over HTTP with streaming capabilities. This flexibility makes MCP adaptable for various deployment scenarios.
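For the local transport, the MCP specification frames each JSON-RPC message as a single line of JSON on the server process's standard input or output. The following is a minimal sketch of that framing only. It uses an in-memory `io.StringIO` in place of a real subprocess pipe, and the helper names `write_message` and `read_messages` and the `echo` tool are hypothetical.

```python
import io
import json
from typing import Any, Dict, Iterator, TextIO


def write_message(stream: TextIO, message: Dict[str, Any]) -> None:
    """Serialize one message onto a single line."""
    # json.dumps escapes newlines inside strings, so the output stays on one line.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[Dict[str, Any]]:
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# In-memory stream standing in for a subprocess's stdin/stdout.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                     "params": {"name": "echo",
                                "arguments": {"text": "line1\nline2"}}})
pipe.seek(0)
received = list(read_messages(pipe))
print(len(received), received[1]["params"]["arguments"]["text"] == "line1\nline2")
```

The same message shapes travel over HTTP for remote servers; only the framing and connection handling differ.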

Benefits of Implementing Model Context Protocol (MCP)

Implementing MCP brings several practical benefits:

1. Simplified Development: Developers do not have to write custom code for every integration; the same integration code can be reused across applications.

2. Flexibility: Because the interface is standardized, you can switch AI models or tools without complicated reconfiguration.

3. Real-time Responsiveness: MCP connections remain active, enabling real-time context updates and interactions.

4. Security and Compliance: The protocol is designed with access controls and security practices in mind, such as keeping the host in charge of which servers and tools the AI can reach.

5. Scalability: It is easy to connect to another MCP server as the AI ecosystem grows.

Conclusion

MCP is an emerging standard for LLM tool integration that will mature over time. As developers build more MCP servers and run into new technical challenges, their experience will feed back into the standard and improve it. ToXSL Technologies is a Generative AI services provider that helps businesses integrate AI into their operations and make them run more smoothly. Contact our team today to request a quote.